Welcome, Robot Overlords

To the glee of a few, and the disappointment of many, I've started getting help from AI with some of my programming.

One of the big challenges of 2025 has been effectively rebuilding my business, Audacious Software, as the Trump administration wages war against my field generally - scientific research - and against many of my clients: Northwestern, Harvard, Columbia, Cornell, University of Pennsylvania, and the University of California.

While I start every year with the promise that this one will be the one where I finally get my shit together, 2025 is 100% about survival and sticking around longer than the stupid and cruel regime in power.

As part of that survival strategy, I've been hard at work diversifying my client base, both at home and abroad. I'm in the final stages of a project that I picked up from a public university, and it's been more illuminating about the future of software development and AI than all the endless commentary on the subject.

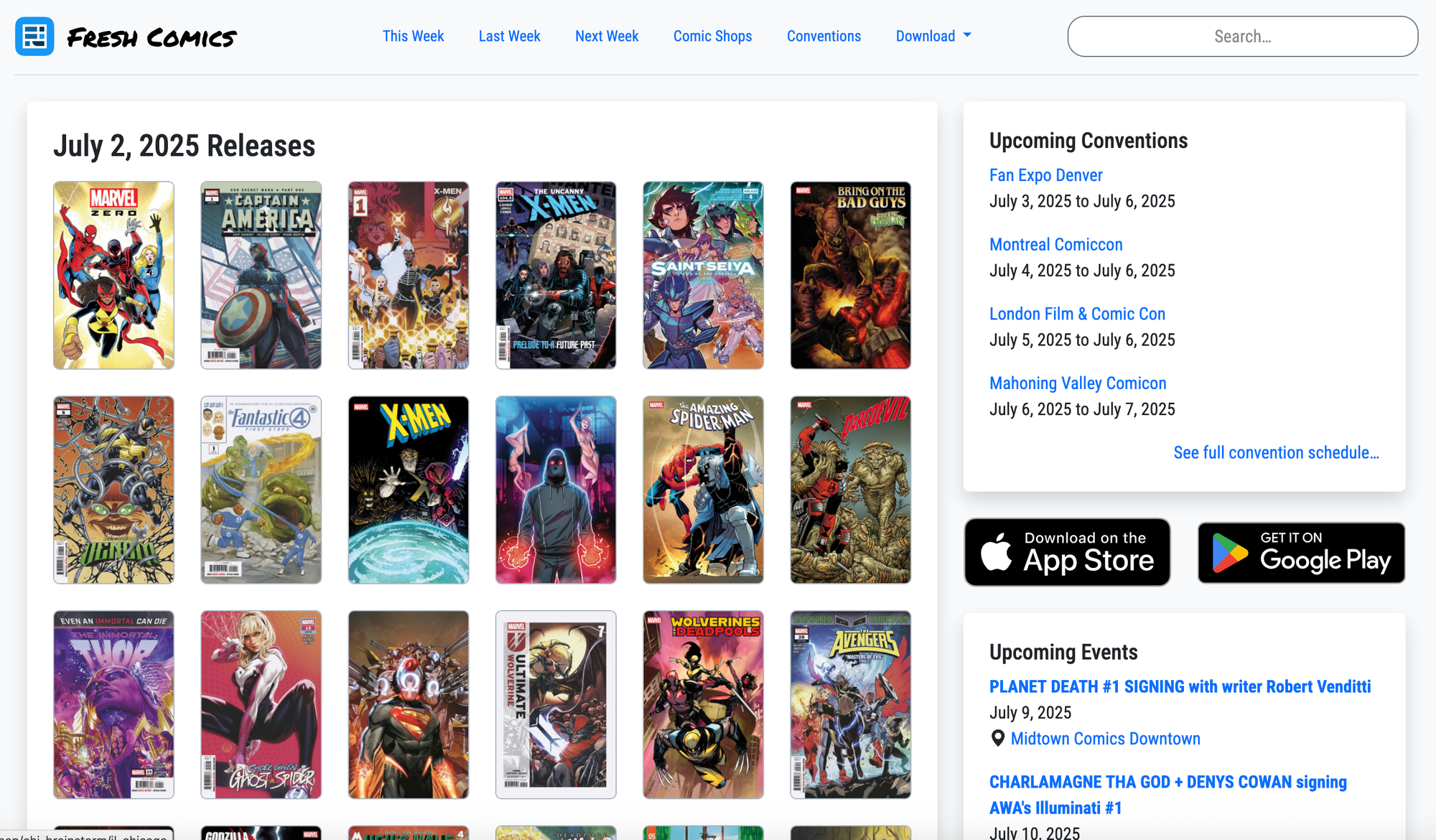

To give a bit of context before we jump into the meat and potatoes: when it comes to building non-trivial networked systems, there are two schools of thought, monoliths vs. micro-services. The monolith guys (and that includes me) tend to build their systems with as few frameworks as necessary, with the components of the system pretty tightly coupled to each other. As a good example, over the past couple of weeks I upgraded Fresh Comics from a Django code base that was over a decade old to a modern Django project.

Fresh Comics consists of the following different technologies working together:

- Django - provides the bulk of the functionality: content management, accounts and authentication, mobile app APIs, etc.

- Postgres - provides the database where Django stores its content.

- Bootstrap - provides the building blocks for Django to render attractive pages in HTML and CSS. Django is the "backend" you don't see, and Bootstrap is the "frontend" you do.

- jQuery - provides some additional tools that make writing JavaScript easier (and safer).

- Mapbox - provides the maps showing comic shops and conventions on the site's pages.

- Solr - provides searching and indexing for over a quarter million objects so visitors can find what they're looking for quickly.

- Redis - caches rendered web pages and other content to keep Django from doing the same work repeatedly when an item becomes popular in a short period of time.

- nginx - serves the Django content to the world, serves static files, and acts as the first line of defense against abusive bots.

- Umami - serves as a self-hosted equivalent of Google Analytics, which allows me to observe user behavior without feeding that data to a web monopolist.

Although Fresh Comics is a fairly traditional monolithic web application, I count nine distinct technologies that work together to implement the site. That said, the vast majority of the site-specific functionality lives within the Django framework. The other technologies complement Django in various ways, but 99% of the time I spent originally creating the site (and updating it recently) was spent writing Python, Bootstrap, or jQuery code to be served as a Django web application.

Now, in the micro-services approach, instead of all of that functionality residing within Django, a web application is unapologetically a collection of smaller web sites, each specializing in one thing, that communicate with each other to create something larger. To use a Transformers analogy, monolithic apps are Titans like Metroplex, while micro-services are Combiners that join together to form larger figures like Devastator.

The micro-services approach is popular for several reasons:

- It encourages code modularity by wrapping areas of concern into different component servers.

- Developers aren't locked into using one language for a project. If Ruby works well for one part of the site and Python works better for another, you can have the best of both worlds at the cost of some additional communication complexity.

- In larger institutions, where "the system" is larger than any single programmer can manage to keep in their head, micro-services provide a natural level of abstraction where different teams can work on their sections of the system with less risk of bugs from one section spilling into another.

- It's easier to sell software services as micro-services than all-in-one hosting packages, especially for parties that don't want to be responsible for managing servers.

While I'm in the monolith camp, even Fresh Comics has a hint of micro-services in it, like how it uses the Java-based Solr server to provide searching and indexing features. The difference between the camps is more of a continuum than a binary either/or choice.
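Solr shows where that continuum starts: Django doesn't call Solr's code directly, it sends HTTP requests to a separate server and reads back JSON. A sketch of building such a request in Python, assuming Solr's standard `/solr/<core>/select` handler on its default port 8983; the host, the core name `comics`, and the `title` field are hypothetical:

```python
from urllib.parse import urlencode

SOLR_BASE = "http://localhost:8983/solr"  # default Solr port; the host is an assumption
CORE = "comics"  # hypothetical core name


def solr_select_url(query: str, rows: int = 10) -> str:
    """Build a URL for Solr's select handler, asking for JSON results."""
    params = {"q": query, "rows": rows, "wt": "json"}
    return f"{SOLR_BASE}/{CORE}/select?{urlencode(params)}"


print(solr_select_url("title:batman", rows=5))
# http://localhost:8983/solr/comics/select?q=title%3Abatman&rows=5&wt=json
```

The only contract between the two programs is an HTTP request and a JSON response, which is exactly the kind of boundary a micro-services design is built around.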

Anyways, this new project is a preexisting one that its original creators built as a micro-services app on Amazon's cloud offerings. To break it down, it uses...

- React - provides the participant-facing website.

- S3 - stores the React files as well as raw data gathered from participants.

- DynamoDB - provides database features for storing structured data.

- CloudFront - serves the S3 files to the web and exposes the various APIs implemented as containers or Lambdas.

- Lambdas - provide a small front-end to scripts - in this case, Python - so that they can be accessed as web APIs.

- Cognito - provides user account management and signup.

- Elastic Container Registry - provides a place to store containers when not in use.

- Elastic Container Service - runs containers as small servers to perform tasks that Lambdas can't handle on their own. In this case, we have one running Python code and another running R code for analysis.

- Batch - starts and stops the containers when needed.

- EventBridge - routes the events S3 emits when data is uploaded, signaling the Batch job queues to start a container and process the uploaded data.

- Simple Email Service - provides e-mail sending functionality to Cognito.

- Identity and Access Management - provides permissions and authorizations for the various components to run and communicate with each other.
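To make the Lambdas bullet concrete: with a proxy-style integration (API Gateway or a Lambda function URL), the Python script receives the HTTP request as an `event` dictionary and returns a dictionary describing the response. A minimal sketch, assuming that proxy payload format; the `participant` query parameter is hypothetical and not taken from this project:

```python
import json
from typing import Any


def handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Entry point Lambda invokes; returns a proxy-style HTTP response."""
    # queryStringParameters may be missing or None when the URL has no query string.
    params = event.get("queryStringParameters") or {}
    participant = params.get("participant")  # hypothetical parameter name
    if not participant:
        return {
            "statusCode": 400,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps({"error": "missing participant"}),
        }
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"participant": participant, "status": "ok"}),
    }
```

Locally, the function can be exercised with `handler({"queryStringParameters": {"participant": "p-001"}}, None)`; in AWS, the Lambda runtime supplies both arguments.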

That's not a complete list (12 items), but it covers the major parts. If I were creating this from scratch in a monolithic fashion, I could do it with about half the number of discrete technologies (Django, Postgres, Bootstrap, jQuery, nginx, R).

In any case, my big challenge on this job has been to pick up where the last developer left off and finish encapsulating all of the complexity above in Amazon's Cloud Development Kit (CDK), a framework for describing cloud infrastructure in ordinary programming languages (TypeScript, in this case) that allows folks like me to deploy all of these components with a script instead of configuring and setting up each one manually. (CDK also does the valuable work of validating that services are being set up in a structurally sound way. It's pretty nifty, and there's a cloud-agnostic analogue called Terraform that I may adopt.)

Now, in this project, the bulk of the challenge has been getting all of these micro-services set up so that they function properly, and Amazon is one of those companies that doesn't have a lot of good documentation for all the complexity it has released into the world. If I'm lucky, I can sometimes find traditional documentation, but these platforms are constantly changing, and Amazon seems allergic to putting the dates the docs were generated or written on the page. In other cases, the documentation is so sparse and atomized as to be useless. So, my main approach throughout this process has been to consult the source code directly and, when that fails, to consult Amazon's Q AI for assistance.

I started using Q because, unless I wanted to spend weeks in an Amazon bootcamp learning the ins and outs of the 363 discrete services they offer, I needed examples of what I was trying to do to get started. For example, what pieces do I need to have S3 signal when a file is uploaded, so that a job is queued up for a container with the path of the uploaded file passed to it as an environment variable? (The answer is Elastic Container Registry, Elastic Container Service, Amazon EventBridge, and S3 - WITH various permissions updated and internal firewalls opened so they can talk to each other.)
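The data flowing through that pipeline is simple even if the plumbing isn't: an S3 "Object Created" event, delivered through EventBridge, becomes environment variables for the container. A sketch of both ends in Python, assuming EventBridge notifications are enabled on the bucket and that the event carries the bucket name and object key under `detail.bucket.name` and `detail.object.key`. The variable names `INPUT_BUCKET` and `INPUT_KEY`, the bucket, and the key are hypothetical:

```python
from typing import Any, Mapping


def env_from_s3_event(event: Mapping[str, Any]) -> list[dict[str, str]]:
    """Turn an EventBridge S3 'Object Created' event into container env vars.

    The name/value pairs mirror the shape of an environment override list;
    the variable names themselves are hypothetical.
    """
    if event.get("source") != "aws.s3" or event.get("detail-type") != "Object Created":
        raise ValueError("not an S3 Object Created event")
    detail = event["detail"]
    return [
        {"name": "INPUT_BUCKET", "value": detail["bucket"]["name"]},
        {"name": "INPUT_KEY", "value": detail["object"]["key"]},
    ]


def input_location(environ: Mapping[str, str]) -> str:
    """Inside the container: rebuild the S3 URI of the uploaded file."""
    return f"s3://{environ['INPUT_BUCKET']}/{environ['INPUT_KEY']}"


# Trimmed-down example event (real events carry more fields).
sample_event = {
    "source": "aws.s3",
    "detail-type": "Object Created",
    "detail": {
        "bucket": {"name": "example-uploads"},
        "object": {"key": "participants/p-001/session.csv"},
    },
}

env = {pair["name"]: pair["value"] for pair in env_from_s3_event(sample_event)}
print(input_location(env))  # s3://example-uploads/participants/p-001/session.csv
```

In a deployed stack this mapping would more likely be configured on the EventBridge rule's target than written as a function, but the shape of the handoff is the same: bucket and key in, environment variables out.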

So, a lot of my usage has been asking Q things like...

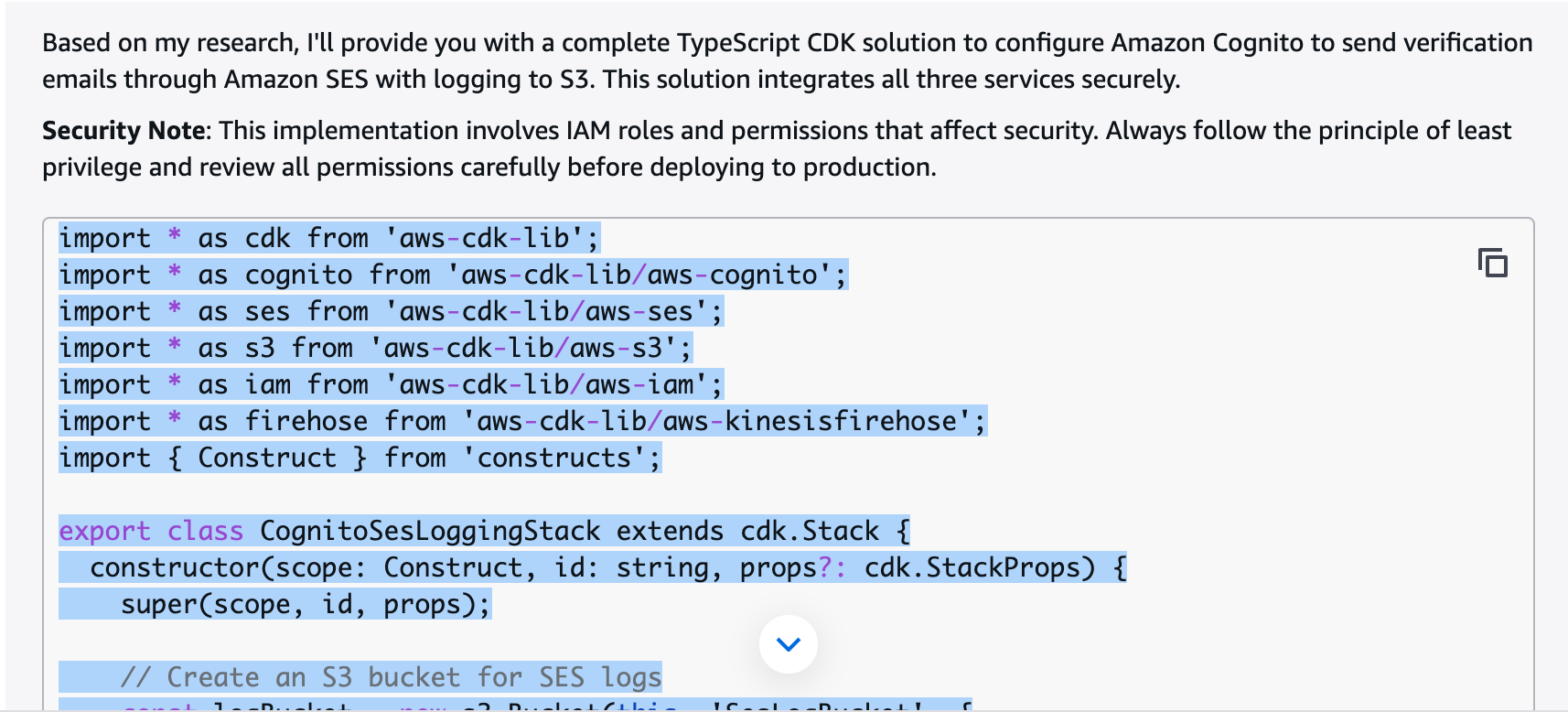

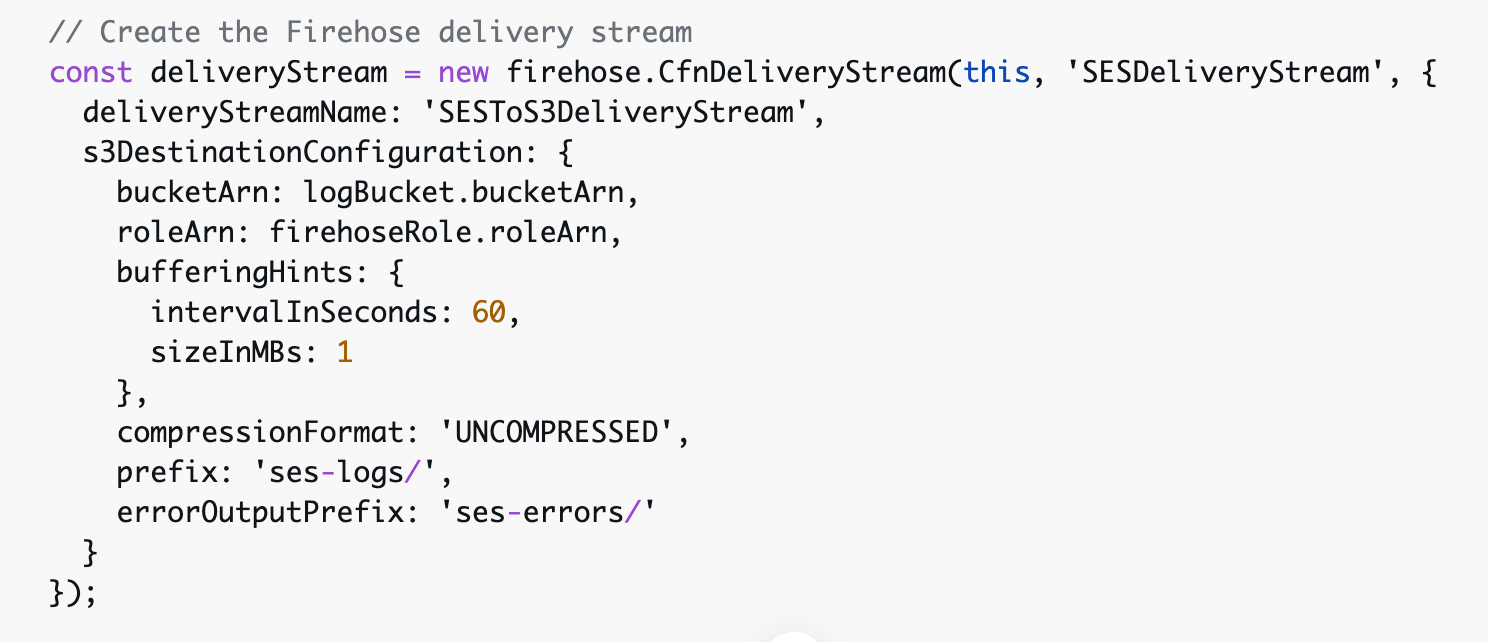

"send verification e-mails from cognito through ses with logging to s3 in cdk typescript"

And Q responds with something like this:

The code goes on for a couple hundred lines. A lot of it duplicates what's already in my existing CDK script, and there's a lot of unnecessary stuff, like directing S3 to transfer files to Glacier after 90 days. However, it did a decent job of helping me find what I was looking for, albeit in a very indirect manner.

The code itself isn't always valid or up-to-date, but there are enough clues in it to tell me what I should be looking at, at which point I can go spelunking back into the original source code to see what the actual parameters are named.

(And in one case, it sidetracked me for hours, as one of these structures wasn't strongly typed and it kept spitting out answers in camelCase when the correct answers were in PascalCase. I blame that more on Amazon's organizational dysfunction than Q, in this case.)
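When a structure is just a loosely typed dictionary, nothing stops a camelCase key from sneaking in where the service expects PascalCase, and the mistake only surfaces at deploy time or runtime. A small sketch of a pre-flight check in Python, assuming every key in the structure should be PascalCase; the keys in the example are hypothetical and not from any specific AWS API:

```python
from typing import Any


def non_pascal_keys(obj: Any, path: str = "") -> list[str]:
    """Return the paths of all dict keys that don't start with an uppercase letter."""
    found: list[str] = []
    if isinstance(obj, dict):
        for key, value in obj.items():
            key_path = f"{path}.{key}" if path else str(key)
            if not (isinstance(key, str) and key[:1].isupper()):
                found.append(key_path)
            found.extend(non_pascal_keys(value, key_path))
    elif isinstance(obj, list):
        for i, item in enumerate(obj):
            found.extend(non_pascal_keys(item, f"{path}[{i}]"))
    return found


settings = {
    "JobSettings": {
        "timeoutSeconds": 60,  # camelCase slipped in
        "Tags": [{"Key": "project", "value": "study"}],  # so did "value"
    }
}
print(non_pascal_keys(settings))
# ['JobSettings.timeoutSeconds', 'JobSettings.Tags[0].value']
```

A real type definition for the structure would catch this at compile time; a check like this is a fallback for when the structure really is untyped.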

While the Monolith Guy in me bristles at all the additional complexity added to this project just so someone could avoid setting up a server (creating a project that is 1000% locked into Amazon in the process), this has been an interesting experience, especially contrasted with the Fresh Comics update.

In the case of Fresh Comics, I've been living with the site since 2011 and know it from top to bottom. The major challenge in that upgrade was determining what needed to change when moving from Python 2.7 to Python 3.10 and from Django 1.11 LTS to 5.2 LTS. Those were pretty mechanical updates to make. On the front-end, after buying and then discarding so many themes once they stopped being supported, I decided to just go with vanilla Bootstrap for the HTML redesign, with an eye towards not having to majorly tweak the design for another decade. Most of my existing web work is done in Django, so I know the full stack inside and out. When something caused an issue, it was pretty easy to locate the problematic code or component and fix it, as there really aren't a lot of places for bugs like that to hide from me. Updating Fresh Comics had a lot in common with the work of Japanese master woodworkers, who know their tools and materials intimately.
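For a sense of what "mechanical" means here, below is a hypothetical function (not from the Fresh Comics code base) ported from Python 2.7 to 3.10, with the Python 2 version kept in comments. On the Django side, the analogous chores include things like replacing `django.conf.urls.url()`, which Django 4.0 removed, with `path()` or `re_path()`.

```python
# Python 2.7 version (hypothetical):
#
#     def average_issue_count(counts):
#         for series, n in counts.iteritems():
#             print "%s: %d issues" % (series, n)
#         return sum(counts.values()) / len(counts)


def average_issue_count(counts: dict[str, int]) -> float:
    for series, n in counts.items():  # dict.iteritems() no longer exists
        print(f"{series}: {n} issues")  # print is now a function, not a statement
    # "/" is true division in Python 3, while Python 2 floored int / int,
    # so a port has to decide where floor division ("//") was actually intended.
    return sum(counts.values()) / len(counts)


print(average_issue_count({"Saga": 3, "Paper Girls": 4}))
# Saga: 3 issues
# Paper Girls: 4 issues
# 3.5
```

Under Python 2.7 the same call would have returned 3, which is the kind of quiet behavior change that makes these ports tedious rather than hard.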

Working in an Amazon-centric world has been a much different experience. I've been using Amazon AWS in various forms for the past twenty years, but mainly as a server host (EC2) and a place to park files (S3). I've intentionally avoided any of their technologies that would have locked me into their cloud, so no Lambdas for me. I have a general sense of what all is there, but it's like working with a stranger's toolbox. I recognize some of the tools, some look familiar, and others appear completely alien to me. I'm confident enough in my work to deliver a final product, but I fully expect to be dealing with unanticipated bugs and problems in a way that is simply absent with Fresh Comics.

As for the Q AI, I'm thankful that it was available to me after I'd hit the limits of the existing documentation, but I worry that LLMs like it will become the future of software documentation: no more book-length treatises on entire systems that explain not only what they are but why they are. Instead, we'll have LLMs trained on source code, existing configurations, and code snippets, and we'll have to interrogate them to figure out how to actually use the technology they're trained on. While some level of documentation will still be necessary (the LLMs have to learn too), I fear that knowledge will be shaped to train the Qs of the world first and the humans second. (Especially as Capital is pushing for AIs to become the new subservient Labor.)

Do I think that AWS expertise is an endangered species? I'm not sure. On one hand, Q accelerated what would have been a longer process for me, though it made a lot of dumb mistakes along the way. As others have commented, it had the effect of raising my low AWS skills to somewhere in the moderate range. On the other hand, I don't think it's going to capture whatever dao the folks building hundreds of AWS services are embedding into their creations. There's still something valuable about grokking the underlying values and philosophies of a system, and I don't see how the underlying math bridges that gap of understanding (even if that same math is very good at appearing to understand these things deeply).

Overall, I'm a little bit annoyed after my first intensive AI pair-coding exercise. There wasn't a clear win in terms of motivating a behavior change on my part (esp. after the camelCase and PascalCase debacle, solved without Q), and it didn't seem to be all that superior to good traditional documentation, had that existed when I needed it. On the other hand, it wasn't so useless that I can confidently promise that I won't use it ever again (esp. in an Amazon AWS context).

There was a part of me hoping I'd have a Saul-on-the-road-to-Damascus moment where I'd see the light, or a "Get thee behind me, Satan!" experience where it was so bad it wasn't worth considering again.

Instead, I'm left with a solid "Meh".

I'm about to get started on a new project that has me returning to C++ with some brand new React thrown in. I may give another AI model a spin to help paper over my rustiness with C++ and to cover my Zone of Proximal Development with React, so there'll be another opportunity to see if there's anything there that changes how I build software. I'm generally bearish on that prospect, but I felt similarly about VS Code, which I use extensively now.

Stay tuned.