Note 65: Consumer AI - A simple proposal

Let's learn from the mistakes of the past three decades, instead of repeating them once again.

A good part of my past week has been spent cursing the darkness that the 2024 technology scene has become: beloved products are being killed (e.g. Google Home, through a death of a thousand cuts), enshittification is ascendant, and rather than focus on creating compelling new products that solve users’ problems, the technology industry is treating the good work of the past two decades as a technological Permian Basin - a pristine resource to be plundered by the spreadsheet jockeys who have taken over from the innovators who started tech companies in the first place. (The latter, with their fuck-you money and tech burnout, are enjoying life outside the tech grind.)

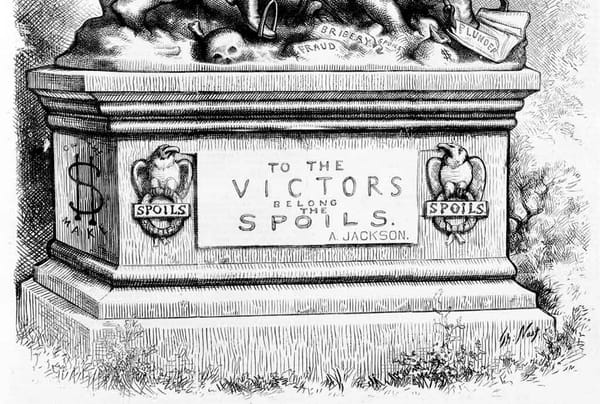

This trend is on full display in the tech industry’s blatant attempts to shove generative AI down consumers’ throats, both as a way to distract from their stewardship of products that are no longer sexy and as a way to replay the Apple and Facebook strategies of establishing walled gardens that lock in users, who can then be even more savagely exploited so that spreadsheet jockeys can hit their key KPIs and continue to fail (users) upwards in their careers.

While I was grabbing breakfast at my local pancake joint, an article from The Verge popped up on my (useful non-AI) RSS feeds, talking about how this week’s Google I/O conference will see the company baking even more AI into the Android platform:

> So far, despite Samsung and Google’s best efforts, AI on smartphones has really only amounted to a handful of party tricks. You can turn a picture of a lamp into a different lamp, summarize meeting notes with varying degrees of success, and circle something on your screen to search for it. Handy, sure, but far from a cohesive vision of our AI future. But Android has the key to one important door that could bring more of these features together: Gemini.
>
> Gemini launched as an AI-fueled alternative to the standard Google Assistant a little over three months ago, and it didn’t feel quite ready yet. On day one, it couldn’t access your calendar or set a reminder — not super helpful. Google has added those functions since then, but it still doesn’t support third-party media apps like Spotify. Google Assistant has supported Spotify for most of the last decade.

As I fumed over this article while eating my salad, a simple solution to my frustration revealed itself. Let’s have these companies bake in as much AI as they want to, with one simple requirement: leave the door open for users to provide their own AI.

Technically, the solution works this way - everywhere Google bakes into Android a function that uses an AI model of some sort, it’s required to expose that integration point as a publicly accessible API. If they’re baking predictive text into the Android text views, that’s great - but there is also a setting somewhere where I can pick which AI does the text prediction: their default (Gemini); OpenAI’s service, by plugging in my ChatGPT credentials; an open source model of my own that I train and host (the Home Assistant project is doing good work on this front); or a null AI that does nothing, effectively disabling the predictive text feature altogether.
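To make the idea a little more concrete, here is a rough sketch in Python of what such an integration point might look like. Everything in it is hypothetical: `TextPredictor`, `NullPredictor`, `CannedPredictor`, `PredictorSettings`, and `suggestions_for_text_view` are names I made up for illustration, not part of Android or any real SDK, and the canned provider simply stands in for whatever Gemini, OpenAI, or a self-hosted model would actually return.

```python
from typing import Dict, List, Protocol


class TextPredictor(Protocol):
    """Hypothetical integration point: anything that can suggest next words."""

    def predict(self, text: str) -> List[str]: ...


class NullPredictor:
    """The 'null AI': never suggests anything, which disables the feature."""

    def predict(self, text: str) -> List[str]:
        return []


class CannedPredictor:
    """Stand-in for a real provider (platform default, hosted service, own model)."""

    def __init__(self, suggestions: List[str]) -> None:
        self._suggestions = suggestions

    def predict(self, text: str) -> List[str]:
        # A real provider would call a model here; this one returns fixed words.
        return list(self._suggestions)


class PredictorSettings:
    """The 'setting somewhere' where the user picks who does the predicting."""

    def __init__(self, default_name: str, default_provider: TextPredictor) -> None:
        self._providers: Dict[str, TextPredictor] = {default_name: default_provider}
        self._selected = default_name

    def register(self, name: str, provider: TextPredictor) -> None:
        self._providers[name] = provider

    def select(self, name: str) -> None:
        if name not in self._providers:
            raise KeyError(f"no predictor registered under {name!r}")
        self._selected = name

    def current(self) -> TextPredictor:
        return self._providers[self._selected]


def suggestions_for_text_view(settings: PredictorSettings, text: str) -> List[str]:
    """What the platform's text view would call; it never names a specific vendor."""
    return settings.current().predict(text)
```

The point of the sketch is that the text view only ever talks to the interface. Swapping the platform default for another provider, or for the null one, is a settings change rather than a platform change.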

We have precedent for this already with web browsers (even though some companies are being dragged kicking and screaming into compliance, ahem, Apple). In Europe, it’s happening with app stores and the Digital Markets Act. Rather than repeat all of the learning we’ve already done on those two fronts, how about we skip the “discovering lock-in is bad” part of the process and give consumers the choice from the start, instead of passively watching companies bundle their AIs with their other products, leveraging the other products’ value to impart some of that value onto their new AI? Hell, the US gov’t took Microsoft to court for this in the late ’90s, and the DoJ is currently dealing with Apple on this front.

While this makes it a bit more difficult for Big Tech companies to establish themselves as dominant AI providers, this proposal is an attempt to light a candle, and it has some very concrete benefits for everyone else:

- It makes AI providers actually compete on their products’ quality instead of artificially leveraging dominance in other markets to keep up. Siri, ChatGPT, Gemini, and LLaMA compete on their own merits. It provides opportunities for new market entrants to compete on a level playing field, instead of having to also own and control their own underlying user platforms.

- It allows AI providers to differentiate their products on dimensions such as price, performance, and privacy. It gives consumers options to make choices that align with their values.

- It makes AI models portable. If I’m plugging Home Assistant’s model into my Android experience and I spend a good amount of time training it, that work isn’t lost when I migrate over to the iPhone and plug in my existing model there. Similarly, this allows me to share models across devices, so I can actually personalize it for myself, instead of personalizing it for some avatar in a group model that the local AI model thinks looks like me.

- It gives users the ability to opt-out. If I don’t want an algorithmic feed in my social network app, and I don’t care for predictive text, or the AI trying to guide my actions to something that makes the AI provider money, I plug in a null model that effectively disables those features.

- If a company decides that they no longer want to compete in the AI space because it doesn’t align with their business interests (see a decade ago when Google went full-on into social networking, then pulled out), users who continue to find value in those features can continue to use them with alternative providers.

Given how simple this proposal is, it should be relatively simple to enact into law (“generative AI shall not be integrated into consumer products without permitting similar AI systems to be integrated in the same manner”), under the public policy justification of promoting consumer choice, preventing existing monopolies from unfairly abusing their positions, providing new market entrants the same opportunities to compete as the incumbents, and potentially enhancing user data control and privacy.

This seems like a simple, user-centered approach to handling the problems and uncertainty that integrated generative AI is causing. It leverages the expensive lessons we’ve already learned to avoid repeating the problems we’re dealing with now.

Outside of the circle of folks who are looking to use AI lock-in as a land grab to capture and control users and markets, what’s the downside?
